# Memory dim3 software

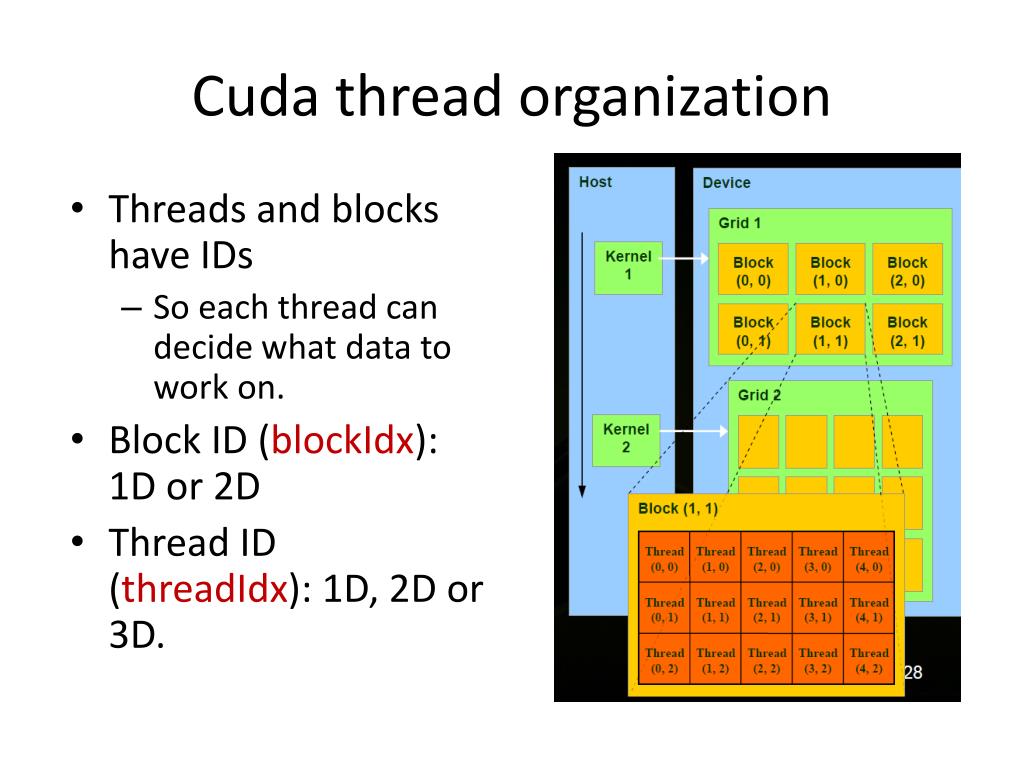

This post is the first in a series on CUDA C and C++, which is the C/C++ interface to the CUDA parallel computing platform. This series of posts assumes familiarity with programming in C. We will be running a parallel series of posts about CUDA Fortran targeted at Fortran programmers. These two series will cover the basic concepts of parallel computing on the CUDA platform. From here on, unless I state otherwise, I will use the term “CUDA C” as shorthand for “CUDA C and C++”.

CUDA C is essentially C/C++ with a few extensions that allow one to execute functions on the GPU using many threads in parallel.

## CUDA Programming Model Basics

Before we jump into CUDA C code, those new to CUDA will benefit from a basic description of the CUDA programming model and some of the terminology used.

The CUDA programming model is a heterogeneous model in which both the CPU and GPU are used. In CUDA, the host refers to the CPU and its memory, while the device refers to the GPU and its memory. Code run on the host can manage memory on both the host and device, and also launches kernels, which are functions executed on the device. These kernels are executed by many GPU threads in parallel.

Given the heterogeneous nature of the CUDA programming model, a typical sequence of operations for a CUDA C program is:

1. Declare and allocate host and device memory.
2. Initialize host data.
3. Transfer data from the host to the device.
4. Execute one or more kernels.
5. Transfer results from the device to the host.

Keeping this sequence of operations in mind, let’s look at a CUDA C example.

In a recent post, I illustrated Six Ways to SAXPY, which includes a CUDA C version. SAXPY stands for “Single-precision A*X Plus Y”, and is a good “hello world” example for parallel computation. In this post I will dissect a more complete version of the CUDA C SAXPY, explaining in detail what is done and why.

The kernel is declared with the `__global__` specifier and has the signature `void saxpy(int n, float a, float *x, float *y)`. The host launches it with the triple-chevron syntax. The information between the triple chevrons is the execution configuration, which dictates how many device threads execute the kernel in parallel: the first argument is the number of thread blocks in the grid, and the second is the number of threads in each block. In CUDA there is a hierarchy of threads in software which mimics how thread processors are grouped on the GPU. Inside the kernel, each thread combines its block index, the block size and its index within the block into a unique global index, `int i = blockIdx.x*blockDim.x + threadIdx.x;`, and uses it to select the array element it processes. Once the kernel has run, the host copies the result back with `cudaMemcpy(y, d_y, N*sizeof(float), cudaMemcpyDeviceToHost);`.
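The five host-side steps and the kernel fragments above fit together roughly as follows. This is a minimal sketch, not the post's own listing. The helper name `run_saxpy`, the block size of 256, the `dim3` variables `grid` and `block`, and the test values `a = 2`, `x[i] = 1`, `y[i] = 2` are illustrative assumptions. Error handling is reduced to a single `cudaGetLastError` check.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cmath>
#include <cuda_runtime.h>

// Kernel: each thread updates one element, y[i] = a*x[i] + y[i].
__global__ void saxpy(int n, float a, float *x, float *y)
{
    // Unique global index of this thread across the whole grid.
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    // The last block may contain threads past the end of the arrays.
    if (i < n) y[i] = a * x[i] + y[i];
}

// Hypothetical helper: runs SAXPY on N elements following the five-step
// host sequence and returns the maximum absolute error against the
// expected result 4.0f, or -1.0f if a CUDA runtime call reported an error.
float run_saxpy(int N)
{
    // 1. Declare and allocate host and device memory.
    float *x = (float *)malloc(N * sizeof(float));
    float *y = (float *)malloc(N * sizeof(float));
    float *d_x = nullptr, *d_y = nullptr;
    cudaMalloc(&d_x, N * sizeof(float));
    cudaMalloc(&d_y, N * sizeof(float));

    // 2. Initialize host data.
    for (int i = 0; i < N; i++) { x[i] = 1.0f; y[i] = 2.0f; }

    // 3. Transfer data from the host to the device.
    cudaMemcpy(d_x, x, N * sizeof(float), cudaMemcpyHostToDevice);
    cudaMemcpy(d_y, y, N * sizeof(float), cudaMemcpyHostToDevice);

    // 4. Execute the kernel. Execution configuration: 256 threads per
    //    block, and enough blocks (ceiling division) to cover N elements.
    dim3 block(256);
    dim3 grid((N + block.x - 1) / block.x);
    saxpy<<<grid, block>>>(N, 2.0f, d_x, d_y);

    // 5. Transfer results from the device to the host. This cudaMemcpy
    //    runs on the default stream, so it waits for the kernel to finish.
    cudaMemcpy(y, d_y, N * sizeof(float), cudaMemcpyDeviceToHost);

    float maxError = 0.0f;
    for (int i = 0; i < N; i++)
        maxError = std::fmax(maxError, std::fabs(y[i] - 4.0f));

    // Reports the most recent error from any earlier runtime call or launch.
    cudaError_t err = cudaGetLastError();

    cudaFree(d_x);
    cudaFree(d_y);
    free(x);
    free(y);
    return err == cudaSuccess ? maxError : -1.0f;
}

int main()
{
    printf("Max error: %f\n", run_saxpy(1 << 20));
    return 0;
}
```

Compiled with `nvcc` and run on a working CUDA device, this should print `Max error: 0.000000`, because 2·1 + 2 = 4 is exactly representable in single precision. The `if (i < n)` guard matters whenever N is not a multiple of the block size. In that case the grid contains a few threads with no element to process.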

# Memory dim3 update

Update (January 2017): Check out a new, even easier introduction to CUDA!
